This blog presents a Gen AI-assisted content creation solution powered by NVIDIA H200 GPUs on Dell PowerEdge XE9680 servers.

Enterprises struggle with efficient and consistent content creation across their documentation needs, particularly in time-sensitive technical marketing and product launches. Traditional content generation processes are resource-intensive and often delay crucial market announcements, requiring significant manual effort to gather information, maintain brand consistency, and ensure technical accuracy. The time lag in creating newsletters and updating product documentation not only impacts market momentum but also consumes valuable engineering time that could be better spent on strategic initiatives. While organizations possess extensive historical content and documentation, they lack efficient tools to leverage this existing knowledge for rapid, timely content creation, often missing critical launch windows and market opportunities.

To address these obstacles, we developed a RAG-based content creation solution powered by the Dell PowerEdge XE9680 server with NVIDIA H200 GPUs. It is designed to automate content workflows while maintaining enterprise security and drawing on existing content repositories. For enterprises struggling with content bottlenecks, brand consistency, or underused knowledge bases, this solution reduces content creation time while preserving quality and accuracy.

In this blog, we explore the solution architecture and demonstrate:

- How RAG-based content generation intelligently leverages your reference documents and visual assets to create content

- How to deploy advanced language and embeddings models on the Dell PowerEdge XE9680, with support for Broadcom Ethernet Adapters (up to 200 GbE), along with eight NVIDIA H200 GPUs for enterprise-scale content generation

- How customizable newsletter and product launch templates enable rapid content creation while maintaining brand consistency and factual accuracy

| Hardware Selection

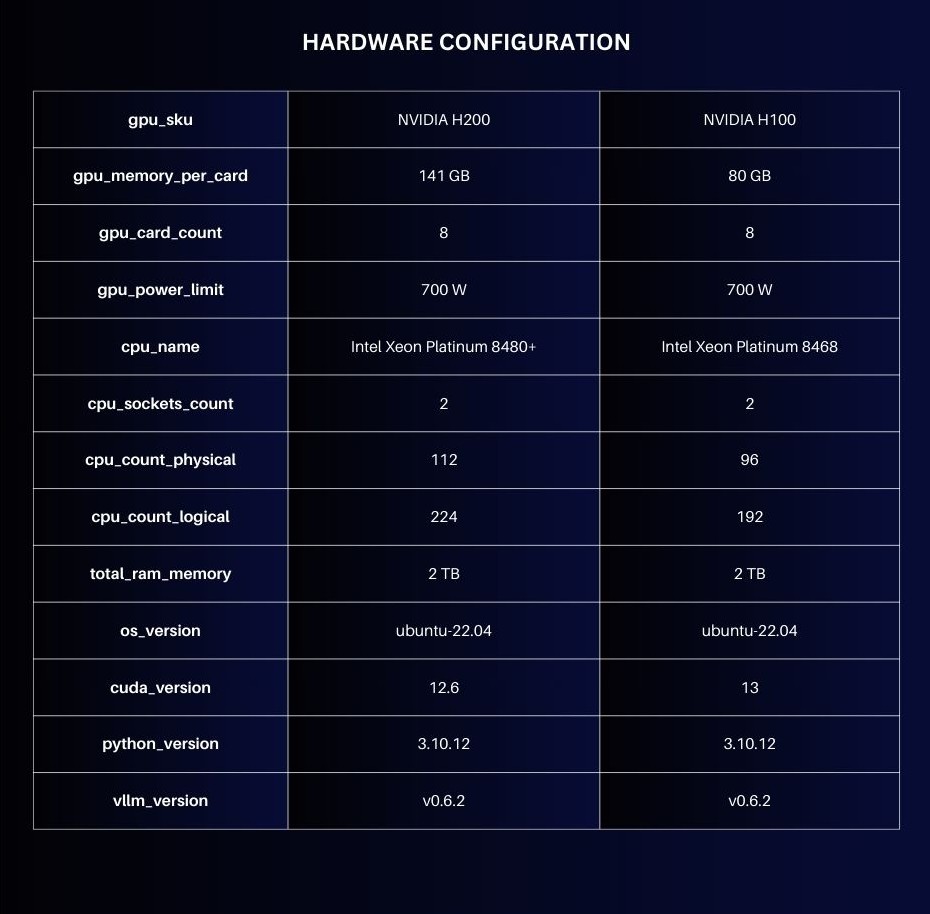

We selected the Dell PowerEdge XE9680 equipped with eight NVIDIA H200 GPUs for our solution because of its performance and memory capacity, both of which are crucial for serving the latest large language models with high parameter counts. With 141 GB of HBM3e memory per GPU, roughly 76% more than the H100’s 80 GB, we can efficiently run and serve multiple instances of leading large language models. Memory- and compute-intensive workloads, such as concurrent model serving and real-time content generation, can be consolidated on a single system with eight H200 GPUs. The server’s support for Broadcom Ethernet network adapters provides high-speed data transfer and efficient network connectivity for distributed workloads.
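To make the memory argument concrete, the following back-of-the-envelope sketch estimates how much GPU memory remains after loading model weights. It uses the per-GPU capacities quoted above and the FP16 Llama 3.1 70B configuration described later in this blog. It counts weights only; a real serving runtime also reserves memory for the KV cache, activations, and its own overhead, so treat the numbers as rough upper bounds rather than measurements.

```python
# Rough memory budget: model weights only, ignoring KV cache and runtime overhead.
H200_GB = 141            # HBM3e per GPU, from the text
H100_GB = 80             # memory per GPU, from the text
GPUS_PER_SERVER = 8

PARAMS_BILLION = 70      # Llama 3.1 70B
BYTES_PER_PARAM = 2      # FP16 stores each parameter in two bytes

# 70e9 parameters * 2 bytes = 140e9 bytes, i.e. about 140 GB of weights.
weights_gb = PARAMS_BILLION * BYTES_PER_PARAM


def headroom_gb(gpu_gb: int, tensor_parallel: int) -> int:
    """Memory left on one model replica after loading the weights, in GB."""
    return gpu_gb * tensor_parallel - weights_gb


print(f"FP16 weights: {weights_gb} GB")
print(f"Headroom per replica at TP=2 on H100: {headroom_gb(H100_GB, 2)} GB")
print(f"Headroom per replica at TP=2 on H200: {headroom_gb(H200_GB, 2)} GB")
print(f"Total memory on one 8x H200 server: {H200_GB * GPUS_PER_SERVER} GB")
```

Under these assumptions, a two-GPU replica on H200 has far more room for KV cache than the same replica on H100, which is one plausible reason the gap widens as concurrency grows.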

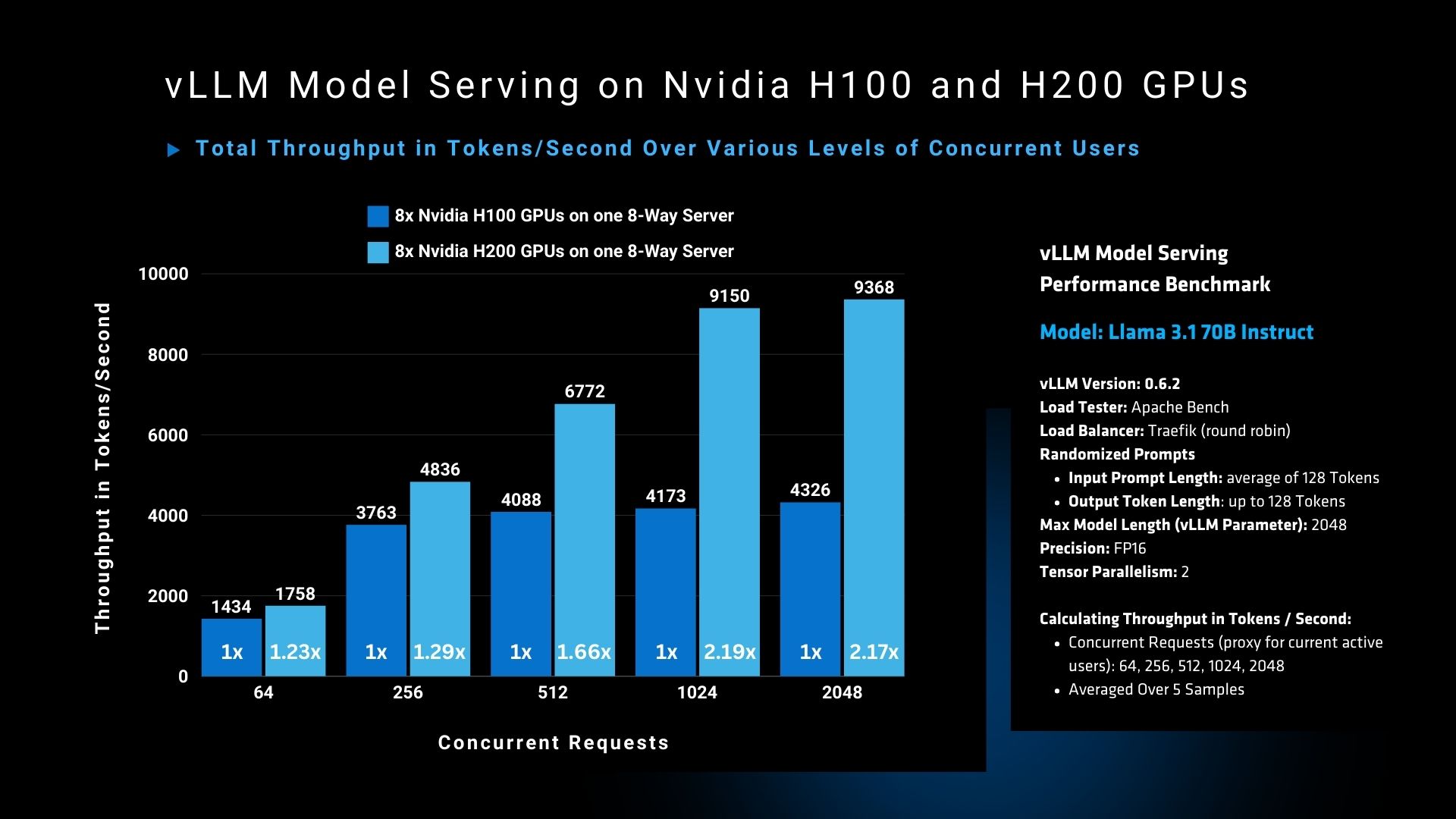

Performance testing of vLLM model serving on NVIDIA H100 and H200 GPUs shows that the H200 provides a substantial throughput advantage over the H100, particularly at high concurrency.

- Throughput and Concurrency Relationship: Tests were conducted by varying the number of concurrent requests, a variable used as a proxy for concurrently active users. A typical industry threshold for interactive applications is 10 tokens per second per user. Lower concurrency levels, such as 512 concurrent requests, yield a per-user throughput exceeding 10 tokens per second, making this configuration well suited to real-time, interactive applications like chatbots. In contrast, high concurrency levels (1,024 and above) yield a per-user throughput of less than 5 tokens per second, which is better suited to batch processing where an immediate response isn’t required.

- H200’s Superior Performance: Across all concurrency levels, the H200 outperforms the H100, delivering between 1.2x and 2.19x the throughput of the H100. The difference is most pronounced at higher concurrency levels, where the H200 consistently achieves more than double the aggregate tokens-per-second throughput of the H100, reaching up to 9,368 tokens per second for 2,048 concurrent requests versus the H100’s 4,326 tokens per second.
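The relationship between aggregate and per-request throughput can be checked with simple arithmetic. The sketch below assumes that the tokens-per-second figures quoted for 2,048 concurrent requests are aggregate values across all requests and that the 10 tokens-per-second threshold applies to each request individually; both readings are our interpretation of the text.

```python
# Figures quoted above for 2,048 concurrent requests (assumed to be aggregate).
CONCURRENCY = 2048
H200_TOKENS_PER_S = 9368
H100_TOKENS_PER_S = 4326
INTERACTIVE_THRESHOLD = 10  # tokens/s per request, the threshold cited above


def per_request_rate(aggregate_tokens_per_s: float, concurrent_requests: int) -> float:
    """Average generation rate seen by a single request."""
    return aggregate_tokens_per_s / concurrent_requests


h200_rate = per_request_rate(H200_TOKENS_PER_S, CONCURRENCY)
h100_rate = per_request_rate(H100_TOKENS_PER_S, CONCURRENCY)
speedup = H200_TOKENS_PER_S / H100_TOKENS_PER_S

print(f"H200: {h200_rate:.2f} tokens/s per request")
print(f"H100: {h100_rate:.2f} tokens/s per request")
print(f"H200 / H100 speedup: {speedup:.2f}x")
print(f"Interactive on H200 at this load: {h200_rate >= INTERACTIVE_THRESHOLD}")
```

At this load both GPUs fall below the interactive threshold, which matches the observation that very high concurrency is better suited to batch workloads.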

This performance improvement is attributable to the H200’s larger and higher-bandwidth HBM3e memory, which lets larger batches and KV caches stay resident on the GPU, combined with the robust architecture of the Dell PowerEdge XE9680 server.

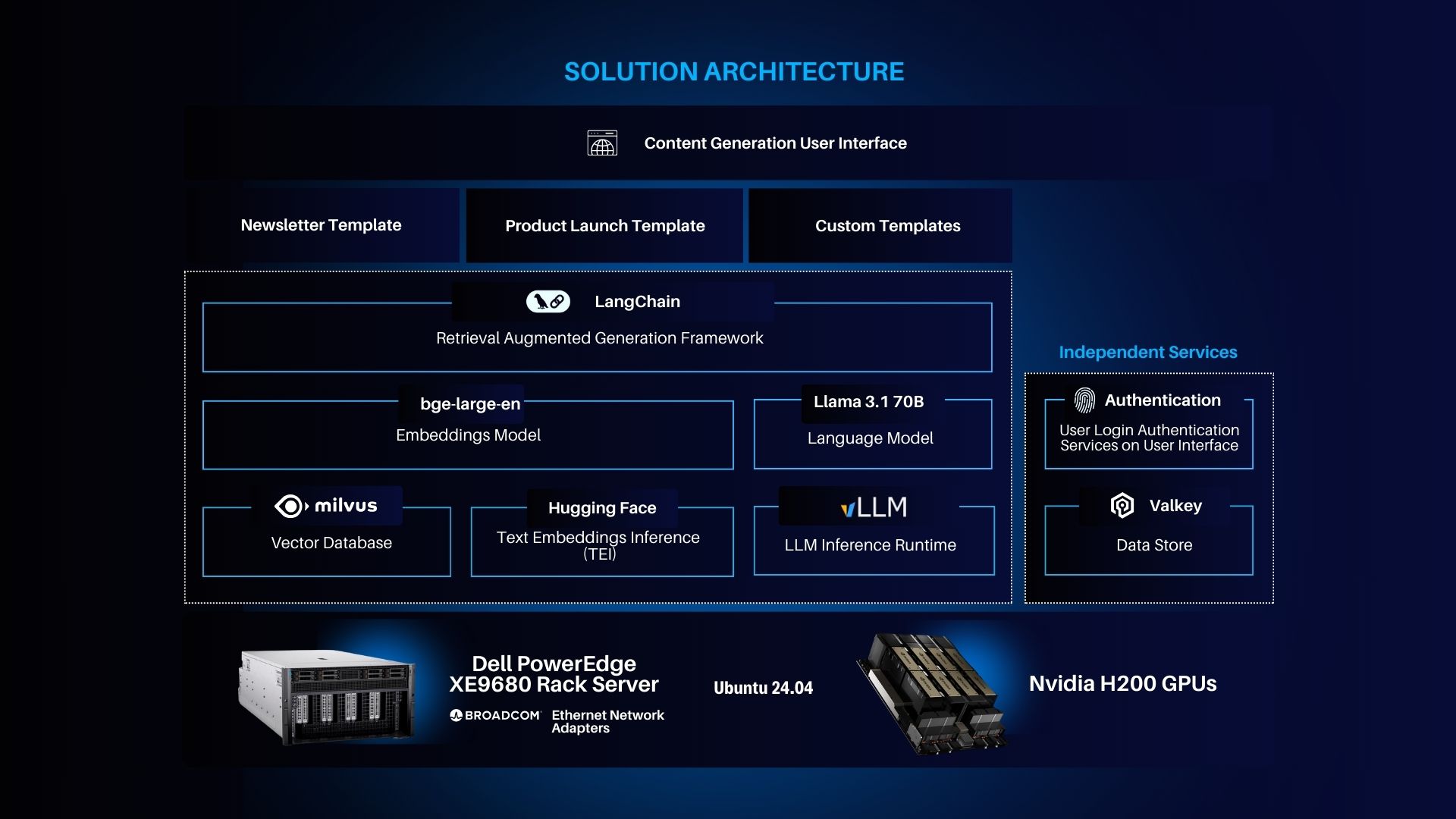

To deliver an industry-specific Gen AI content creation solution, we integrated several critical components, shown in the architecture diagram, including advanced text generation models, embeddings models, and a vector database. The memory capacity and performance characteristics of the Dell PowerEdge XE9680 with NVIDIA H200 GPUs make it possible to support this extensive software stack without compromising accuracy or efficiency.

| Solution Architecture

This solution leverages the following technologies:

- Content Generation via LLMs and Retrieval-Augmented Generation: Large language models (LLMs) are at the core of this architecture, enabling the generation of high-quality, contextually relevant content. By integrating retrieval-augmented generation (RAG), the system can dynamically pull from external data sources to enhance the accuracy and relevance of the generated outputs.

- Plug-and-Play Modular Architecture: The architecture enables seamless integration of various critical components such as embedding models, vector databases, and LLMs. This modular approach allows for the easy addition of new models and data processing tools to adapt to evolving requirements.

- High-Performance and Scalable Hardware Setup: To meet the demands of real-time content generation and retrieval tasks, the system runs on powerful hardware, including NVIDIA GPUs and Dell PowerEdge servers, ensuring low-latency and scalable operations.

To enable these features, the software stack includes the following key components:

- LangChain: A framework for retrieval-augmented generation (RAG) that connects LLMs with external data sources to improve response accuracy and relevance.

- bge-large-en (Embeddings Model): A high-ranking text embedding model that converts text into dense numerical representations for tasks like semantic search and document similarity.

- Llama 3.1 70B (Language Model): An advanced, open-weight large language model with 70 billion parameters, optimized for generating natural language text and understanding queries.

- Hugging Face APIs: Provides tools for text embedding and inference, facilitating the deployment and real-time serving of models.

- vLLM (LLM Inference Runtime): A PyTorch-based runtime for efficiently serving LLMs, ensuring optimized model inference and high throughput.

- Milvus (Vector Database): An open-source vector database designed for high-performance embedding storage and similarity search, critical for fast retrieval in RAG workflows.

- Authentication Services: Ensures secure access control, protecting interactions between users, agents, and the various components of the system.

- Valkey (Data Store): An open-source key-value data store, used here to hold authentication data securely and efficiently.
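The way these components cooperate in a RAG pipeline can be illustrated with a minimal, dependency-free sketch. It is not the solution's implementation: the `embed` function is a toy bag-of-words stand-in for an embedding model such as bge-large-en, `InMemoryVectorStore` is a hypothetical stand-in for a vector database such as Milvus, and the final prompt would be sent to the LLM rather than printed.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: linear scan over vectors."""

    def __init__(self) -> None:
        self._rows = []

    def add(self, text: str) -> None:
        self._rows.append((text, embed(text)))

    def search(self, query: str, top_k: int = 2) -> list:
        query_vec = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(query_vec, row[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


def build_prompt(task: str, passages: list) -> str:
    """Combine retrieved passages and the task into a single LLM prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Use only the context below.\n\nContext:\n{context}\n\nTask: {task}"


store = InMemoryVectorStore()
store.add("The PowerEdge XE9680 supports eight NVIDIA H200 GPUs.")
store.add("Milvus stores embeddings for similarity search.")
store.add("Valkey holds authentication data.")

passages = store.search("How many GPUs does the XE9680 support?", top_k=1)
prompt = build_prompt("Write one sentence about GPU support.", passages)
print(prompt)
```

In a production pipeline, dense embeddings and an approximate nearest-neighbor index replace the linear scan, but the retrieve-then-prompt flow keeps the same shape.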

| Solution Overview

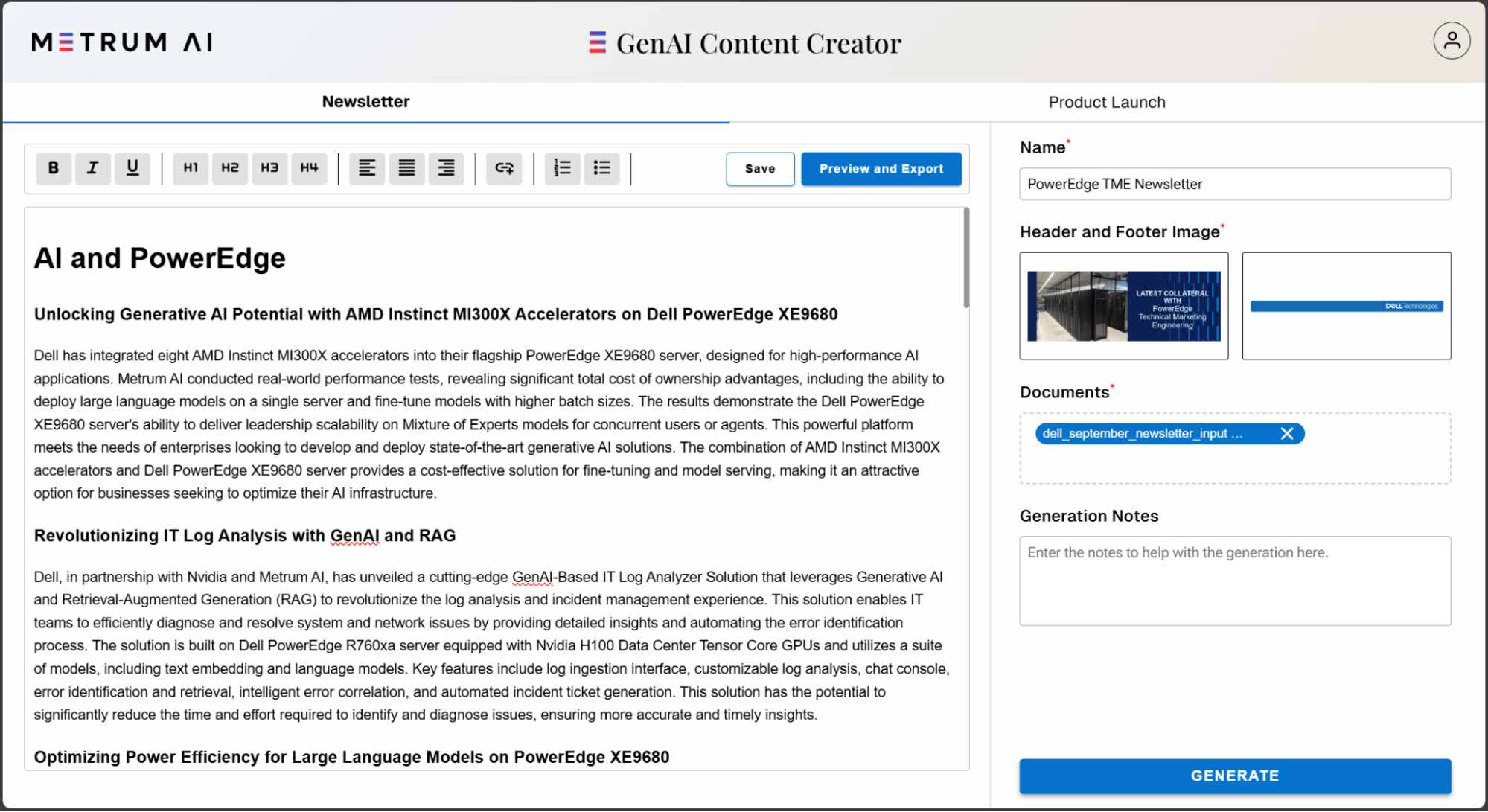

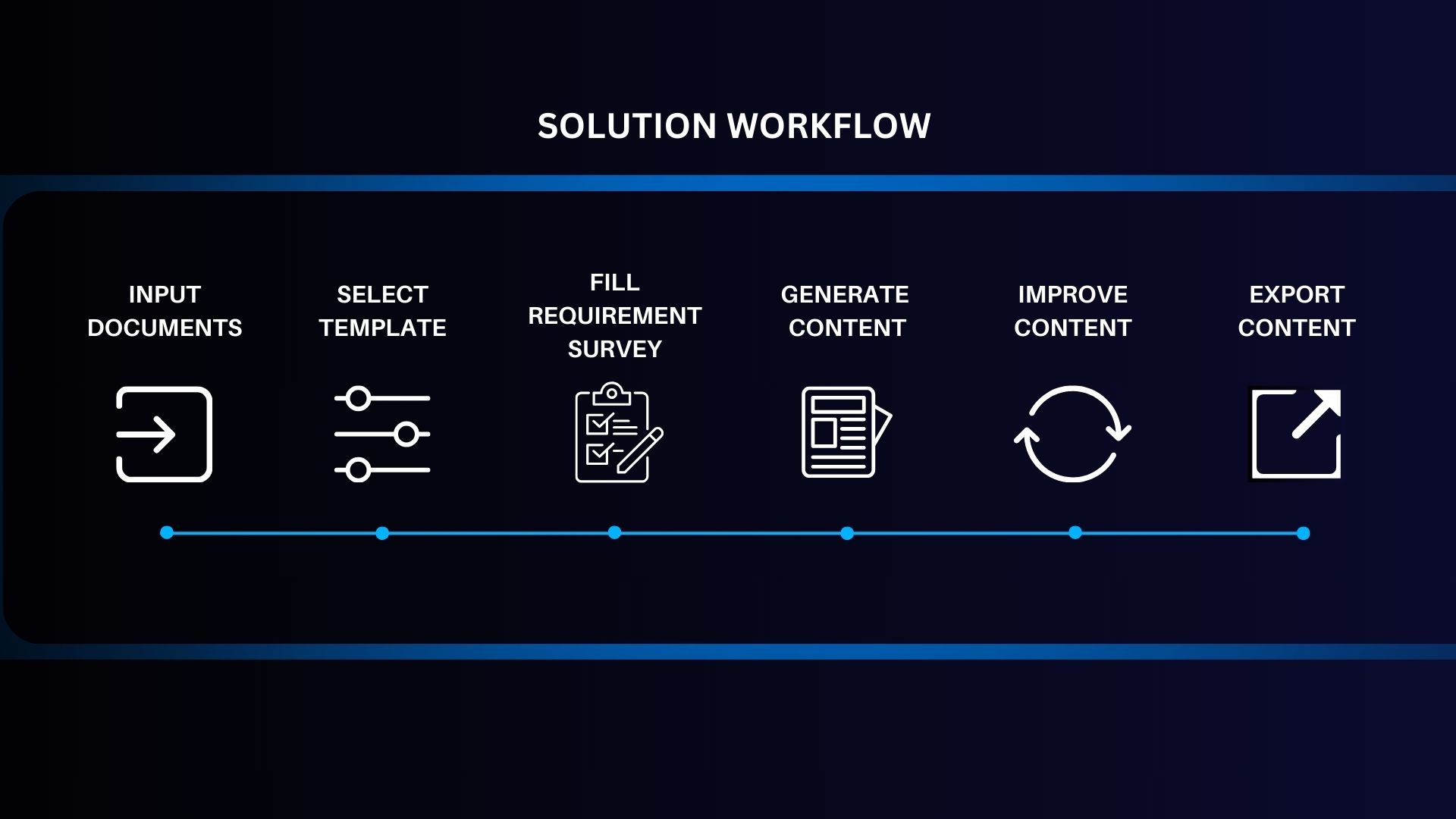

This solution provides a streamlined interface for users to efficiently generate high-quality content. Users can select from various content templates, such as “Generate Newsletter” or “Product Launch,” and customize key inputs like the name of the document and header/footer images. Documents can be uploaded as reference materials, through which a RAG pipeline pulls relevant information for content generation.

The tool also includes a preview and export-as-HTML feature, giving users a quick way to view and finalize their content. After entering any additional generation notes, users can click “Generate” to create concise, well-structured content, reducing manual effort while maintaining flexibility for personalization. The user interface screenshot shows the Gen AI Content Creator, through which users can upload reference documents and images, generate content, and edit their content in-line.

The workflow diagram illustrates each segment of the workflow and details how the RAG-based agentic workload and vector database interact with the uploaded reference documents to generate the final content.
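As a rough illustration of how template selection, user notes, and retrieved passages might be combined, and how generated text could be exported as HTML, consider the sketch below. Only the two template names come from this blog; the template wording, the `ContentRequest` class, and both functions are hypothetical and do not describe the actual tool.

```python
import html
from dataclasses import dataclass
from typing import List, Optional

# Template names come from the text; the instruction wording is hypothetical.
TEMPLATES = {
    "Generate Newsletter": "Write a concise newsletter with a headline and short sections.",
    "Product Launch": "Write a product launch announcement highlighting key features.",
}


@dataclass
class ContentRequest:
    template: str
    document_name: str
    notes: str = ""


def build_generation_prompt(request: ContentRequest, passages: List[str]) -> str:
    """Assemble template instructions, user notes, and retrieved passages."""
    lines = [TEMPLATES[request.template], f"Title: {request.document_name}"]
    if request.notes:
        lines.append(f"Additional notes: {request.notes}")
    lines.append("Reference material:")
    lines.extend(f"- {passage}" for passage in passages)
    return "\n".join(lines)


def export_html(request: ContentRequest, body_text: str, header_image: Optional[str] = None) -> str:
    """Wrap generated text in a minimal HTML page, escaping all user-provided text."""
    header = ""
    if header_image:
        header = f'<img src="{html.escape(header_image, quote=True)}" alt="header">'
    paragraphs = "".join(
        f"<p>{html.escape(block.strip())}</p>"
        for block in body_text.split("\n\n")
        if block.strip()
    )
    title = html.escape(request.document_name)
    return (
        f"<!DOCTYPE html><html><head><title>{title}</title></head>"
        f"<body>{header}{paragraphs}</body></html>"
    )


request = ContentRequest("Product Launch", "XE9680 <Launch>", notes="Keep it brief.")
prompt = build_generation_prompt(request, ["The XE9680 supports eight H200 GPUs."])
page = export_html(request, "First paragraph.\n\nSecond & last.", header_image="header.png")
print(prompt)
print(page)
```

Escaping every user-supplied string before it reaches the HTML output is a deliberate choice here, since document names and notes are free-form input.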

As demonstrated in our implementation, enterprises can now leverage Generative AI to enhance content creation and documentation processes, while maintaining control over their proprietary data and workflows. Dell’s flagship PowerEdge XE9680 server, equipped with NVIDIA H200 Tensor Core GPUs, provides the robust computing power needed to support these sophisticated content generation and document processing use cases.

In this blog, we demonstrated how enterprises can leverage their existing content and documentation to harness advanced generative AI capabilities in the context of a comprehensive content creation tool. We explored the capabilities of the Dell PowerEdge XE9680 server equipped with NVIDIA H200 Tensor Core GPUs, achieving the following milestones:

- Tested NVIDIA H200 GPU versus NVIDIA H100 GPU performance in a vLLM model serving scenario with Llama 3.1 70B in FP16 precision.

- Developed an enterprise-grade content generation solution utilizing large language models and customizable templates.

- Implemented an intuitive, flexible system that streamlines newsletter creation and product documentation, with specialized focus on Dell TME content and PowerEdge product launches.

To learn more, please request access to our reference code by contacting us at contact@metrum.ai.

| Addendum

| Performance Testing Methodology

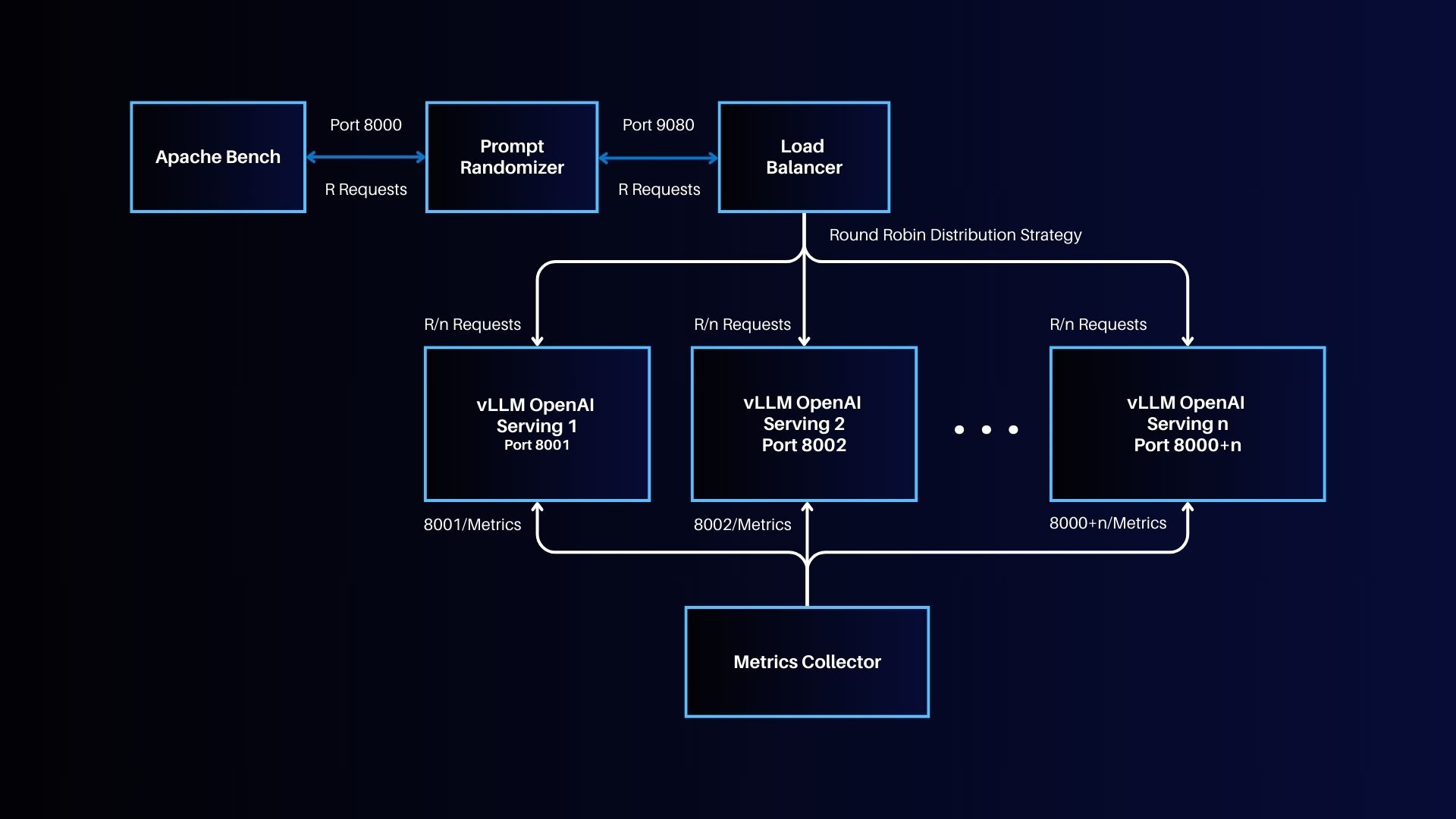

Our performance benchmarking architecture, as shown in the diagram above, consists of four key components: Apache Bench for load testing, a Prompt Randomizer for generating diverse inputs, Traefik as a load balancer, and multiple vLLM serving replicas. vLLM (Virtual Large Language Model) is an open-source library designed to optimize the inference and serving of large language models (LLMs). It addresses the high computational demands and inefficient memory management typically associated with deploying LLMs in real-world, client-server applications, and implements a number of optimizations, including dynamic batching. Here, we use vLLM 0.6.2 to reflect a common approach enterprises take to deploy AI.

Traefik serves as our load balancer, distributing input requests from Apache Bench across the multiple vLLM serving replicas. Each replica is configured to load the model with the specified tensor parallelism. Apache Bench, working through the prompt randomizer, accesses Traefik at port 9080, which then distributes requests among the vLLM replicas using a round-robin strategy.
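The round-robin strategy itself is simple to express. The following sketch is a conceptual illustration only, not Traefik's implementation; the replica URLs are hypothetical placeholders.

```python
from collections import Counter
from itertools import cycle
from typing import List


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation, one per incoming request."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def route(self) -> str:
        """Return the backend that should handle the next request."""
        return next(self._rotation)


# Hypothetical replica addresses, one per vLLM server.
replicas = [f"http://vllm-replica-{i}:8000" for i in range(8)]
balancer = RoundRobinBalancer(replicas)

assignments = [balancer.route() for _ in range(16)]
load = Counter(assignments)
for backend, count in sorted(load.items()):
    print(backend, count)
```

Because every replica receives the same number of requests, round-robin works well when replicas are identical and requests have similar cost, as is the case with fixed input and output lengths.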

We deployed Llama-3.1-70B-Instruct using vLLM 0.6.2 with FP16 precision, and conducted performance testing by varying the number of concurrent requests using Apache Bench with the following values: 1, 2, 4, 8, 16 … 4096, 8192. We also employed a prompt randomizer component, which substitutes each request’s prompt with a random one drawn from a pool of “k” prompts. The pool is configurable and can hold up to millions of prompts, simulating real-world concurrent user activity.
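A minimal sketch of the concurrency sweep and the prompt randomizer is shown below. It assumes that the elided concurrency values continue the doubling pattern; the `PromptRandomizer` class and the example prompt pool are hypothetical and only illustrate the idea of drawing prompts at random from a fixed pool.

```python
import random
from typing import List, Optional

# Assumption: the elided values follow the doubling pattern 1, 2, 4, ..., 8192.
CONCURRENCY_LEVELS = [2 ** exponent for exponent in range(14)]


class PromptRandomizer:
    """Draws a random prompt from a fixed pool for each outgoing request."""

    def __init__(self, prompts: List[str], seed: Optional[int] = None) -> None:
        if not prompts:
            raise ValueError("prompt pool must not be empty")
        self._prompts = list(prompts)
        self._rng = random.Random(seed)

    def next_prompt(self) -> str:
        return self._rng.choice(self._prompts)


# Hypothetical pool of k = 1000 prompts.
pool = [f"Summarize release note #{i} in two sentences." for i in range(1000)]
randomizer = PromptRandomizer(pool, seed=0)

# Build one batch of prompts for the concurrency level of 8.
level = CONCURRENCY_LEVELS[3]
batch = [randomizer.next_prompt() for _ in range(level)]
print(CONCURRENCY_LEVELS)
print(len(batch), "prompts drawn for concurrency", level)
```

Randomizing prompts helps keep server-side caching from making repeated identical requests look faster than real traffic would be.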

Our testing parameters were chosen to reflect real-world scenarios. We used an input prompt length of approximately 128 tokens, with a maximum of 128 new tokens generated and a maximum model length of 2048. We collected our final throughput metrics by averaging over five samples, and used eight vLLM servers with a tensor parallelism of 2.
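The parameters above map fairly directly onto a vLLM server launch and an OpenAI-style completion request. The sketch below only builds the argument list and request body without running anything. The Hugging Face model ID and the use of the `vllm serve` entry point with the completions request format are assumptions about the setup, and the throughput samples are placeholder numbers, not measured results.

```python
import json
import statistics
from typing import List

MODEL_ID = "meta-llama/Llama-3.1-70B-Instruct"  # assumed Hugging Face model ID


def vllm_server_args(tensor_parallel: int = 2, max_model_len: int = 2048) -> List[str]:
    """Command-line arguments for one vLLM replica (FP16, TP=2, 2048 context)."""
    return [
        "vllm", "serve", MODEL_ID,
        "--dtype", "float16",
        "--tensor-parallel-size", str(tensor_parallel),
        "--max-model-len", str(max_model_len),
    ]


def completion_payload(prompt: str, max_new_tokens: int = 128) -> str:
    """JSON body for an OpenAI-compatible completions request."""
    return json.dumps({"model": MODEL_ID, "prompt": prompt, "max_tokens": max_new_tokens})


def mean_throughput(samples: List[float]) -> float:
    """Average tokens/s over exactly five benchmark samples."""
    if len(samples) != 5:
        raise ValueError("expected five samples")
    return statistics.mean(samples)


print(" ".join(vllm_server_args()))
print(completion_payload("Write a short product summary."))
# Placeholder values for illustration only, not benchmark results.
print(mean_throughput([100.0, 102.0, 98.0, 101.0, 99.0]))
```

Averaging several samples smooths out run-to-run variation, which can be noticeable at high concurrency.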

To monitor performance, we employ a Metrics Collector module that gathers production metrics from each vLLM server’s /metrics endpoint. This comprehensive setup allows us to thoroughly evaluate the performance of H200 and H100 GPUs in handling LLM workloads, providing valuable insights for enterprise AI deployments.
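A metrics collector of this kind typically parses the Prometheus text format returned by each /metrics endpoint and derives rates from counter differences. The sketch below is a simplified illustration rather than the module used in our tests: it parses text passed in as a string instead of making HTTP calls, the sample values are made up, and the counter name `vllm:generation_tokens_total` is an assumption that should be verified against your vLLM version.

```python
import re
from typing import Dict

# Matches "name{labels} value [timestamp]" or "name value [timestamp]".
# Simplification: label values containing "}" are not handled.
_SAMPLE_LINE = re.compile(
    r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)(?:\s+-?\d+)?$"
)


def parse_metrics(text: str) -> Dict[str, float]:
    """Sum sample values per metric name, ignoring comments and labels.

    Summing across label sets is meaningful for plain counters only.
    """
    totals: Dict[str, float] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE_LINE.match(line)
        if match is None:
            continue
        name, _labels, value = match.groups()
        totals[name] = totals.get(name, 0.0) + float(value)
    return totals


def tokens_per_second(before: Dict[str, float], after: Dict[str, float],
                      elapsed_s: float,
                      counter: str = "vllm:generation_tokens_total") -> float:
    """Generation rate derived from two scrapes of a monotonically increasing counter."""
    return (after[counter] - before[counter]) / elapsed_s


# Made-up scrape contents for illustration, taken five seconds apart.
scrape_1 = """
# HELP vllm:generation_tokens_total Number of generation tokens processed.
# TYPE vllm:generation_tokens_total counter
vllm:generation_tokens_total{model_name="demo"} 1000.0
"""
scrape_2 = scrape_1.replace("1000.0", "1640.0")

rate = tokens_per_second(parse_metrics(scrape_1), parse_metrics(scrape_2), elapsed_s=5.0)
print(f"{rate:.1f} tokens/s")
```

Summing this rate across all replicas would give the aggregate throughput for the whole deployment.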

| Hardware Configuration Details

| Additional Criteria for IT Decision Makers

| What is RAG, and why is it critical for enterprises?

Retrieval-Augmented Generation (RAG) is a method in natural language processing (NLP) that enhances the generation of responses or information by incorporating external knowledge retrieved from a large corpus or database. This approach combines the strengths of retrieval-based models and generative models to deliver more accurate, informative, and contextually relevant outputs.

The key advantage of RAG is its ability to dynamically leverage a large amount of external knowledge, allowing the model to generate responses that are informed not only by its training data but also by up-to-date and detailed information from the retrieval phase. This makes RAG particularly valuable in applications where factual accuracy and comprehensive details are essential, such as customer support, academic research, and other fields that require precise information.

Ultimately, RAG provides enterprises with a powerful tool for improving the accuracy, relevance, and efficiency of their information systems, leading to better customer service, cost savings, and competitive advantages.

| What are AI agents, and what is an agentic workflow?

AI agents are autonomous software tools designed to perceive their environment, make decisions, and take actions to achieve specific goals. They utilize artificial intelligence techniques, such as machine learning and natural language processing, to interact with their surroundings, process information, and perform tasks with varying degrees of independence and complexity.

An agentic workflow in AI refers to a sophisticated, iterative approach to task completion using multiple AI agents and advanced prompt engineering techniques. Unlike traditional single-prompt interactions, agentic workflows break complex tasks into smaller, manageable steps, allowing for continuous refinement and collaboration between specialized AI agents. These workflows leverage planning, self-reflection, and adaptive decision-making to achieve higher accuracy and efficiency in task execution. By employing multiple AI agents with distinct roles and capabilities, agentic workflows can handle complex problems more effectively, often producing results that are significantly more accurate than conventional methods. This approach represents a shift towards more autonomous, goal-oriented AI systems capable of tackling intricate challenges across various domains.
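The iterative draft, review, and revise pattern described above can be sketched in a few lines. In this illustration the three agents are plain Python functions with hard-coded behavior standing in for LLM calls; the function names, the `APPROVED` sentinel, and the review rule are all hypothetical.

```python
from typing import Callable, Tuple


def run_agentic_workflow(
    task: str,
    writer: Callable[[str], str],
    reviewer: Callable[[str], str],
    reviser: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Draft, then alternate review and revision until approved or out of rounds."""
    draft = writer(task)
    revisions = 0
    for _ in range(max_rounds):
        feedback = reviewer(draft)
        if feedback == "APPROVED":
            break
        draft = reviser(draft, feedback)
        revisions += 1
    return draft, revisions


# Stub agents; in a real system each would call an LLM with its own role prompt.
def writer(task: str) -> str:
    return f"Draft for: {task}"


def reviewer(draft: str) -> str:
    return "APPROVED" if "H200" in draft else "Mention the H200 GPUs."


def reviser(draft: str, feedback: str) -> str:
    return draft + " Powered by NVIDIA H200 GPUs."


final_draft, revisions = run_agentic_workflow(
    "XE9680 newsletter intro.", writer, reviewer, reviser
)
print(final_draft)
print("revisions:", revisions)
```

The `max_rounds` cap bounds the loop so that a reviewer that never approves cannot stall the workflow.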

| What are some of the typical types of LLM context window scenarios and requirements for various RAG applications?

| Scenario | Use Case Examples | Token Lengths | Dell PowerEdge XE9680 (8x H200) Advantages |
|---|---|---|---|
| Long Input Sequences | Summarizing lengthy reports, analyzing large datasets | 10,000 - 50,000 tokens | Efficiently processes high token counts without latency spikes |
| Large Models | Complex content generation, intricate data analysis | 2,000 - 5,000 tokens per request | Supports large models with high precision |
| Large Batch Sizes | Bulk data processing, large-scale content generation | 500 - 2,000 tokens per item in batch | High throughput for batch-oriented tasks |
| Standard Inference | Short-form responses, chatbot replies | 100 - 500 tokens | Suitable for standard tasks but excels in high-token scenarios |

| References

Dell images: Dell.com

Copyright © 2024 Metrum AI, Inc. All Rights Reserved. This project was commissioned by Dell Technologies. Dell and other trademarks are trademarks of Dell Inc. or its subsidiaries. Nvidia and combinations thereof are trademarks of Nvidia. All other product names are the trademarks of their respective owners.

***DISCLAIMER - Performance varies by hardware and software configurations, including testing conditions, system settings, application complexity, the quantity of data, batch sizes, software versions, libraries used, and other factors. The results of performance testing provided are intended for informational purposes only and should not be considered as a guarantee of actual performance.